This article was featured in the One Story to Read Today newsletter.

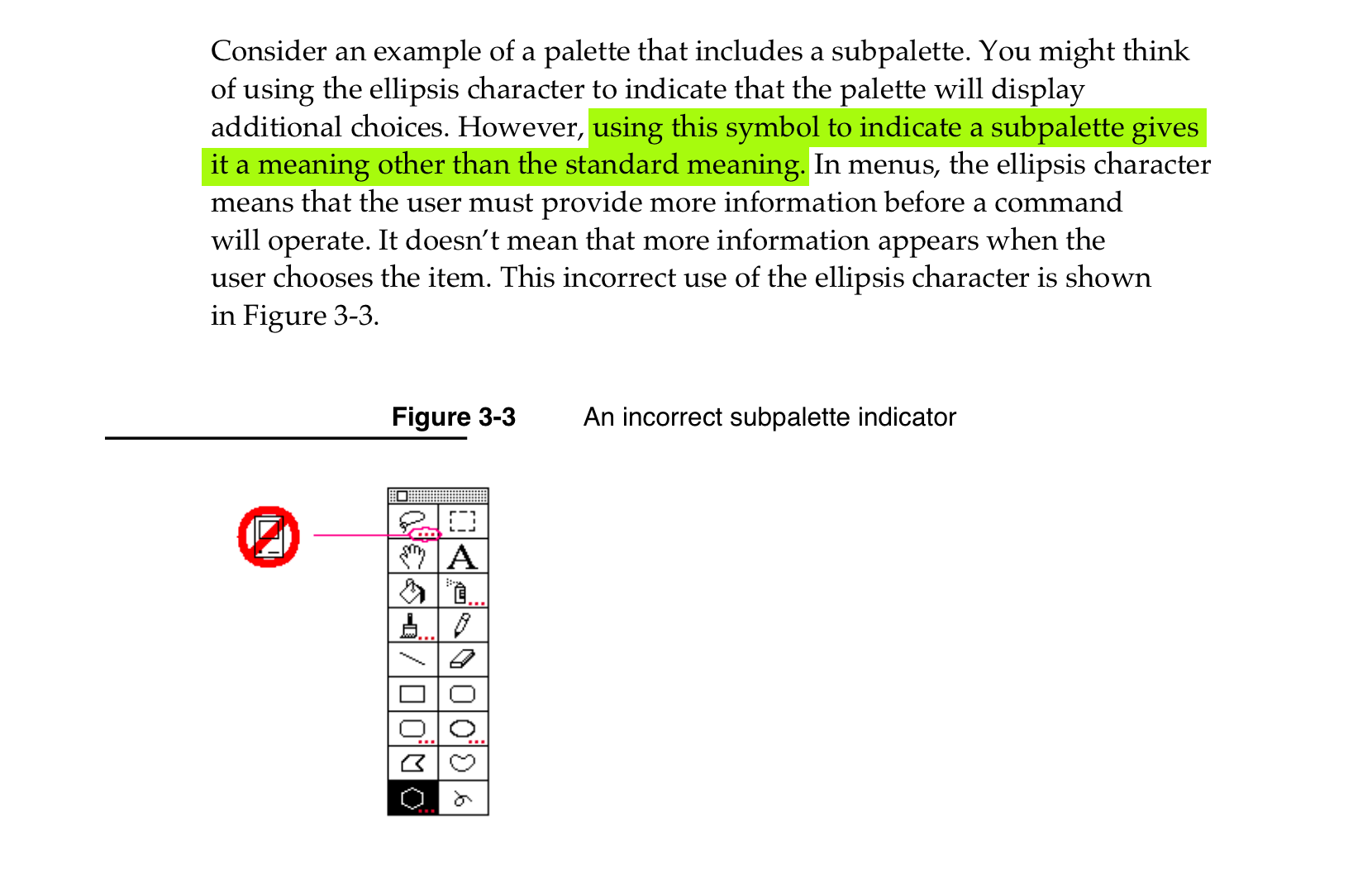

The birthday-party invitation said “siblings welcome,” which means you can bring your 11-month-old son while your husband is out of town. You arrive a little disheveled and a little late. Your 5-year-old daughter rushes into the living room, and you make your way to the kitchen, wearing your son in a sling. You find a few moms around a table arrayed with plates of fruit, hummus, celery sticks, and carrots—no gluten, no nuts, no Red 40. These parents care about avoiding pesticides, screen time, and processed foods, and you do too.

It’s a classic kids’ party: Tears and lemonade are spilled; mud and cake get smeared into the rug; confetti balloons are popped one by one, showering elated children in rainbow-paper flakes. Sunbeams through the windows illuminate floating dust motes—and, imperceptibly, microdroplets of mucus carrying the measles virus, expelled from an infected but asymptomatic child who is hopping and laughing among the others. Your daughter breathes that same air, inhaling the virus directly into her respiratory tract.

The infected aerosolized droplets will linger in the air for hours, which is partly why measles is among the most contagious diseases in the world. The virus infects roughly 90 percent of unvaccinated people exposed to it; the infected can then, in turn, infect a dozen to several hundred people each, depending on where they are and what they’re doing. Breakthrough cases are possible among the vaccinated, but they tend to be rare, relatively mild, and less likely to spread. A single dose of the MMR vaccine is 93 percent effective at preventing infection; two doses are 97 percent effective. Among the unvaccinated, one in five people infected with measles in the United States will require hospitalization, and roughly two out of every 1,000 infected children will die of complications, regardless of medical care.

Your daughter behaves normally over the next week while the virus slowly spreads inside her, infecting immune cells that carry it to the lymph nodes, where it replicates and spreads at a rapid pace. Your daughter is at school cutting alphabet shapes out of paper when the virus enters her bloodstream. But she doesn’t feel anything until she seems to come down with a cold—dry cough, runny nose, itchy and watery eyes—about a week after the party, because the virus has multiplied and descended upon her lungs, kidneys, tonsils, and spleen, down to the marrow of her bones. When she starts running a fever, your mind turns to the logistics of taking off work while she’s home from school. You’ve witnessed enough colds as a mother to not be worried about this one. You feel some confidence in your instincts when it comes to your child’s health, and you’ve grown skeptical of medical interventions. It’s why you and your husband decided to wait to vaccinate your kids, though you’re a little conflicted about it. You’re not an extremist. You’re open to new information. You can always change your mind, you reason. You’re just weighing the evidence.

Ten days out from the party, your daughter’s cold has worsened. Her throat is sore, her appetite is low, and she’s running a fever that sometimes ticks up to 104. Colds can be rough. You plant her on the couch with a blanket and put Bluey on the TV while she drifts in and out of sleep. You coax her to eat by offering ice cream, which she says feels good on her throat. She’s a tough kid, but you can tell she’s miserable—there are circles under her eyes as she complains of a headache, then grimaces when she coughs. You can feel with a tender touch that the glands in her neck are swollen and uncomfortable. Her fever still hasn’t dropped. After a few days, you experience the first tug of serious concern. On the phone, your mom suggests that it might be COVID, or maybe the flu. Push fluids, she says, and keep an eye on it. You put your daughter to sleep in your bed, in case she needs you in the night.

The next morning, you lay your hand on her forehead, and the heat of her skin sends a ripple of unease through you. The measles virus is attacking the cells that line her lungs and suppressing her immune system, rendering her vulnerable to secondary infections. You step away to feed the baby and put him in clean clothes, but then rush back when you hear her calling you in a strained, croaking voice, her vocal cords swollen and thick with mucus. You find her lying in bed with her hand over her eyelids and tear tracks on her temples. She asks you to close the curtains because the sunlight is hurting her eyes—the virus has triggered a case of conjunctivitis. In all of the colds you’ve nursed her through, she has never complained of pain triggered by light. When you rouse her to give her Tylenol, you see that the whites of her eyes are reddened, and the bases of her eyelashes sticky. You carefully clean her eye area with a damp paper towel, kiss her nose, then leave her to sleep it off.

While the kids are napping, you tap a list of your daughter’s symptoms into Google and find a slew of diseases that more or less match up, because fevers, coughs, and sore throats are common to many illnesses. You post about it to the parents’ group, where a few moms with ill kids offer solidarity, and others commiserate over similar episodes with their own children. One woman says it’s time to call the doctor. You’ve had friction with your pediatrician over vaccinations, but this mom may be right. Later that day, when your little girl is curled up on the couch with a cold chocolate-milk protein shake, you go to take her temperature and find that her face is dotted with a spotty red rash descending from her hairline. The virus has infected capillaries in her skin, which typically happens three to five days after the symptoms start, but you don’t know that. It doesn’t hurt, she says, though it’s itchy. Her fever remains high and unrelenting. You pull out your phone and type chicken pox symptoms into your browser, hoping that you’ve found a viable culprit. It sort of fits, so you hold off on calling the doctor.

But her condition does not improve over the next couple of days. Her cough wracks her whole body, rounding her delicate bird shoulders. She does not sleep well. And as you lift up her pajama top to check her rash one morning, you see that her breathing is labored, shadows pooling between her ribs when she sucks in air. You suffer an icy moment of realization: This is a medical crisis. What you will learn later is that the tiny air sacs inside her lungs have become breeding grounds for the virus, and the inflammation generated by her immune response is inhibiting oxygen from reaching her bloodstream. You don’t want to worry your daughter, so you try to sound calm when you call the pediatrician and describe her symptoms at a rapid clip. The receptionist responds gently, types swiftly, and then pauses. Are your children vaccinated? she asks. Her tone is flat and inscrutable, but you detect an undercurrent of judgment. You wince and tell her the truth. No, you say, no vaccines. She puts you on hold. While you wait, you take your son out of his high chair and wipe his runny nose with his bib.

The receptionist is back. She asks if you can be at the office within the hour. In an even, professional voice, she gives you a number to call as soon as you arrive, but tells you to stay in your car. The doctor, she says, will come to you.

You’re there in 30 minutes, unshowered and wearing sweatpants, with your daughter bundled up and shivering in her pajamas and your son fussing in his car seat. You call the office. From the car, you cannot see the sign on the pediatrician’s office door instructing patients with symptoms like your daughter’s not to come inside. Flashes of the pandemic play back as you see the pediatrician and two nurses approaching in the rearview mirror wearing N95 masks. It hits you: This is not the flu. This is not chicken pox. This is serious.

You twist around in the front seat to watch the pediatrician as she leans into your car and begins her exam, asking you questions about symptoms and timing. A nurse takes swabs from the nose and throat, which will be sent for testing by the local public-health authority, then clips a pulse oximeter onto your daughter’s fingertip. The doctor leans in to lay the cold diaphragm of her stethoscope against your daughter’s back. The doctor tells her to breathe. You tell her she’s doing a great job, and reach back to pet her knee. The doctor hears crackling with every breath your daughter takes, as air moves through the fluid trapped in her lungs. The oximeter reveals that her blood is only 90 percent saturated with oxygen, well below the healthy range of 95 to 100 percent. The pediatrician tells you to drive directly to the hospital. Your daughter is in pain and bewildered and afraid, but you tell her everything is okay; you’re just going to see a different doctor. Your son is fussing in his car seat. You try to keep your voice even, though your heart is pounding.

While you drive a little too fast to the emergency room, the pediatrician’s office calls the hospital to warn that a suspected measles patient is on the way, and then places a mandatory call to the public-health authority notifying them of your daughter’s condition. Once you arrive, things happen quickly. Because measles is what researchers call a high-consequence infectious disease, health-care professionals undertake a series of strict protocols to limit its spread. You and your daughter are fitted with masks before you are brought in through a side door to avoid contaminating the waiting room, and then herded into an isolated negative-pressure room designed to prevent the aerosolized virus from traveling into the hall. After hospital workers whisk your daughter away for an emergency X-ray, they will shut down the affected areas of the radiology department for six hours to carry out decontamination measures, a thorough process protracted by the virus’s capacity to cling to walls and linger in the air. While your daughter gets her scan, you try to soothe your son, whose forehead begins to feel worryingly hot to you.

Your daughter looks so small in her hospital bed, her face fitted with an oxygen mask. Nurses collect blood and urine; you hold the cup as she shivers on the toilet, then stroke her hair as the needle spears her vein. When you’ve regained some composure a couple of hours later, a doctor comes to speak with you. This is the first time anyone has used the word measles. The doctor tells you that your daughter has pneumonia, a complication arising in roughly 6 percent of measles cases, though some researchers suspect that the actual rate may be higher. There is no cure for viral pneumonia from measles, but the hospital will provide supportive care to treat the symptoms, including her scalding fever and rash. The doctor doesn’t tell you then that pneumonia is the most common cause of death in measles patients. You will learn that later on.

The swabs taken by the pediatrician test positive for measles, and your child’s case becomes a data point in an outbreak. Each measles patient can infect a dozen or more unvaccinated people, and cases in your community are multiplying rapidly. A public-health official comes to gather information for contact tracing, and asks you to think of everyone your child has interacted with in the past couple of weeks. You think of her class at school, the grocery store, the car wash where you wait indoors, the birthday party.

Articles will soon appear in the local newspaper asking people who may have visited the post office or Target or the indoor playground on various days during various time frames to call the public-health office. Your child’s school will send out emails asking that parents keep unvaccinated children at home for the next three weeks, the virus’s maximum incubation period. As the outbreak spreads, local pediatricians will offer the MMR vaccine to children younger than a year old, because unvaccinated infants are especially vulnerable to the disease. The exponential growth in measles cases in the area attracts media attention, recriminations, and questions about blame.

Not that you notice. You practically live in the intensive-care unit as your daughter slowly recovers. When they discharge her a week later, they send instructions for at-home care, including hydration, decent air humidity, and plenty of rest. The disease will leave her with a lingering cough and occasional wheezing, and it will take months for her lungs to fully heal. She will fall behind in school and need tutoring to catch up, but all of these complications will seem trivial after you’ve come so close to something so dark that you can barely contemplate it. In the meantime, and until her rash heals, your daughter’s doctor insists that she remain under quarantine at home—along with your son.

Given your son’s fever, runny nose, and evident discomfort, you feel a grim sense of resignation when his measles test comes back positive. You are, however, alarmed when you discover there’s nothing his doctors can do about it. Had he been seen by a doctor within 72 hours of his first exposure, they could have given him a prophylactic dose of the MMR vaccine to protect him from infection. But it’s too late for that now. And you couldn’t have known then, anyway—when he was exposed, your daughter wasn’t symptomatic yet.

You feel uneasy caring for your son at home, having witnessed what the infection did to your daughter. But he is medically stable for now, and isolating him at home will limit the spread of the disease. You anxiously wonder whether you’ll know when his needs turn critical, particularly because he is too young to tell you how he feels. He cries inconsolably, unlike your daughter, and sometimes screams. After his rash appears, you notice when he wakes from a nap that pus has drained from his ear onto his crib sheets. You will learn later that an opportunistic bacterial infection has taken advantage of your son’s suppressed immunity by setting up in his middle ear, causing inflammation and fluid buildup to burst his eardrum. You call the pediatrician’s office, and they patch you through to the doctor. You wait for her in the same spot in the parking lot as last time. She diagnoses your son with a severe ear infection and prescribes antibiotics. On a superstitious level, you think this means nothing else bad can happen.

But within a few days, your son’s fever will spike as high as 105 degrees. The virus will break through his underdeveloped blood-brain barrier and begin attacking his brain matter directly, leading to primary measles encephalitis. The condition is rare among older children but more common in infants, who are also more likely to die from measles. You will panic and call an ambulance when he slumps over unconscious on the floor, and another swarm of doctors and nurses will descend upon your child and whisk him away deep into the building while you trail behind as closely as you can. Like your daughter, your son will need supportive care, but he will also need close monitoring of the pressure inside his skull. While your husband stays home with your daughter, you keep vigil at the hospital for as long as you’re allowed, sometimes sleeping in the car to avoid missing any time squeezing his little hand. Days pass, then a week, two weeks. The nurses are kind. There are now several other children in the same hospital unit suffering from measles complications, some of them tethered to ventilators.

Somehow, your son recovers well enough for you to take him home. He has lost some of his hearing, but the doctors say that he could make a full recovery in a matter of months. It is hard to describe the gift this is, the relief you feel. Most children infected with measles will survive the virus, but 30 percent of cases lead to complications, and it is nearly impossible to predict which patients will be affected.

Your children seem so fragile as they recover over the next year, but then the four of you are back to your usual adventures. For roughly eight years, you will believe that your family made it through this crisis without suffering a tragedy. You marvel at your good fortune, and feel a rush of gratitude the day your daughter returns to school and life resumes its normal rhythm. But years later, when your baby is in fourth grade, he will begin struggling with subjects he had once mastered. His teachers will ask to speak with you about how he is suddenly acting out in uncharacteristic ways.

You will not think of his measles infection when he begins suffering muscle spasms in his arms and hands, nor when his pediatrician recommends that you see a neurologist. You realize you have entered a new nightmare when nurses affix metal electrodes to your son’s scalp with a cold conductive paste to perform an electroencephalogram to measure his brain waves. As the neurologist examines the results, she will note the presence of Radermecker complexes: periodic spikes in electrical activity that correlate with the muscle spasms that have become disruptive. She will order a test of his cerebrospinal fluid to confirm what she suspects: The measles never really left your son. Instead, the virus mutated and spread through the synapses between his brain cells, steadily damaging brain tissue long after he seemed to recover.

You will be sitting down in an exam room when the neurologist delivers the diagnosis of subacute sclerosing panencephalitis, a rare measles complication that leads to irreversible degeneration of the brain. There are treatments but no cure, the neurologist will tell you. She tells you that your son will continue to lose brain function as time passes, resulting in seizures, severe dementia, and, in a matter of two or three years, death. You look at your son, the glasses you picked out with him, the haircut he chose from the wall at the barbershop, the beating heart you gave him. You imagine your husband’s face when you break the news, the talks you will have with your daughter, your mother, your in-laws—though there is no way to prepare for what is coming. And you know that you, too, will never recover.

This story is based on extensive reporting and interviews with physicians, including those who have cared directly for patients with measles.